Have you ever wondered how you stumbled upon that anti-vaccine video on YouTube? Or why one innocent click can sometimes lead to an avalanche of videos that not only claim vaccines cause autism but also try to sell you vitamins and magical herbs?

Recently, I completed a study (Tang et al., 2020) that examined how user behavior and YouTube’s ranking and recommendation algorithms influence exposure to different kinds of vaccine information.

We explored how patterns of exposure on YouTube differ when a person starts with a keyword-based search on YouTube itself (goal-oriented browsing) and when a person starts from an anti-vaccine video they encountered on another website, such as Facebook (direct navigation). We simulated these patterns of exposure by building four networks of videos from YouTube’s recommendations.

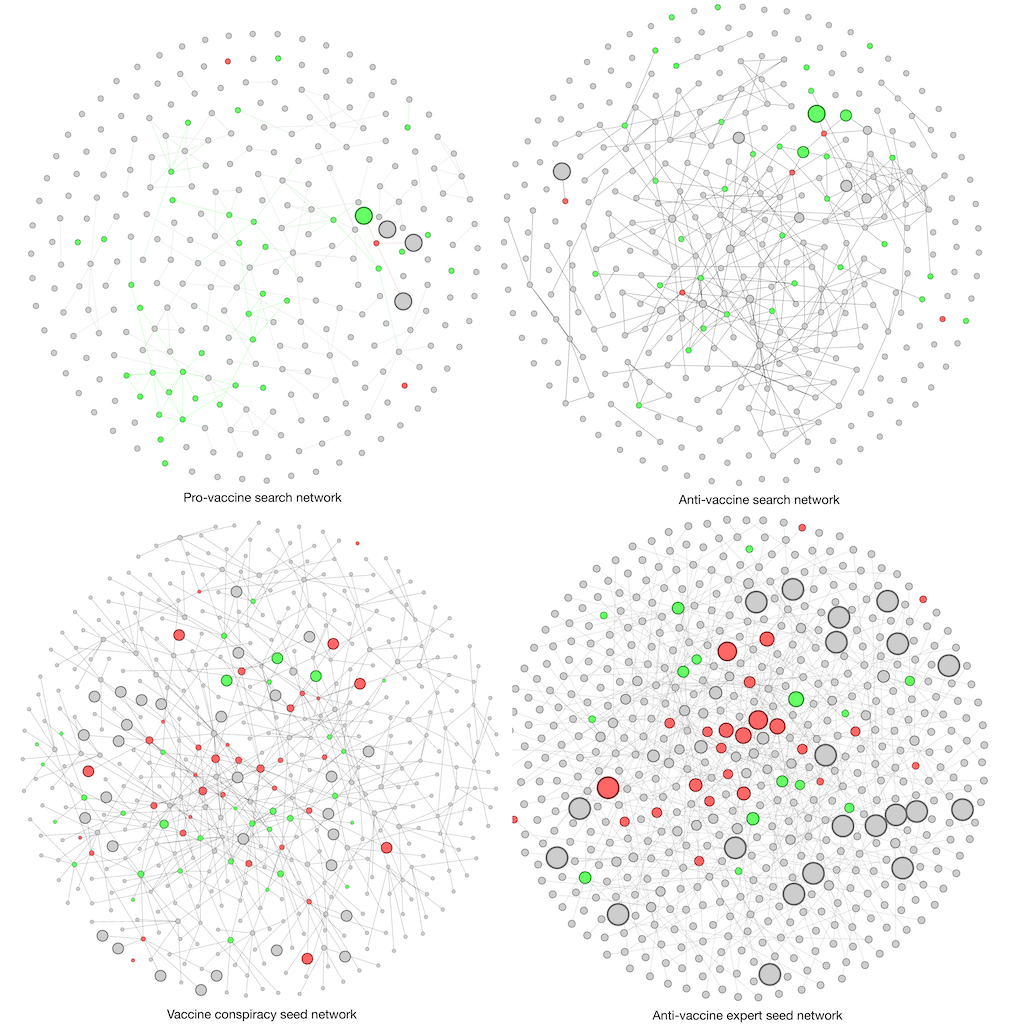

First, we created two search networks, one from a set of pro-vaccine keywords and the other from a set of anti-vaccine keywords, keeping the videos collected from each set of keywords in its own network. For each network, we collected the first six videos returned by the search; for each of these six videos, we collected six recommended videos; and for each video in this second layer, we collected another six recommended videos. The same procedure was used to create two additional networks starting from two sets of anti-vaccine seed videos. (In the four networks above, green dots represent pro-vaccine videos, red dots represent anti-vaccine videos, and gray dots represent non-vaccine videos.)
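The collection procedure described above is a fixed-depth snowball crawl: six starting videos, six recommendations for each of them (36), then six for each of those (216), so under this reading a network holds at most 6 + 36 + 216 = 258 videos, and fewer when the same video shows up more than once. Below is a minimal Python sketch of such a crawl under stated assumptions. It is not the study’s actual collection code: `snowball_network` and `toy_recommend` are hypothetical names, `recommend` stands in for whatever returns a video’s recommendation list (it is not a real YouTube API call), the video IDs are made up, and the rule that an already-collected video is not collected again is an assumption.

```python
from typing import Callable, Dict, List


def snowball_network(
    seeds: List[str],
    recommend: Callable[[str], List[str]],
    fan_out: int = 6,
    depth: int = 3,
) -> Dict[str, List[str]]:
    """Build a recommendation network layer by layer (snowball sampling).

    Layer 1 is the first `fan_out` seeds (e.g. top search results or seed
    videos). Each later layer holds the first `fan_out` recommendations of
    every video in the previous layer. Returns an adjacency list mapping each
    collected video to the recommendations that were followed from it.
    """
    # Layer 1: keep the first `fan_out` seeds, dropping duplicates in order.
    layer = list(dict.fromkeys(seeds[:fan_out]))
    graph: Dict[str, List[str]] = {video: [] for video in layer}

    # Expand depth - 1 times, so `depth` layers of videos are collected.
    for _ in range(depth - 1):
        next_layer: List[str] = []
        for video in layer:
            recs = recommend(video)[:fan_out]
            graph[video] = recs  # record the recommendation edges
            for rec in recs:
                # Assumption: a video already in the network is linked to,
                # but not collected or expanded a second time.
                if rec not in graph:
                    graph[rec] = []
                    next_layer.append(rec)
        layer = next_layer
    return graph


# Hypothetical toy recommender: every video gets six brand-new recommendations.
def toy_recommend(video: str) -> List[str]:
    return [f"{video}.{i}" for i in range(6)]


seeds = [f"s{i}" for i in range(6)]
network = snowball_network(seeds, toy_recommend)
print(len(network))  # 258, i.e. 6 + 36 + 216 when nothing overlaps
```

With a toy recommender that keeps returning the same six videos, the same call collects only 12 videos, which shows how overlapping recommendations shrink a network.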

We found that viewers are more likely to encounter anti-vaccine videos through direct navigation starting from an anti-vaccine seed video (the bottom two networks) than through goal-oriented browsing (the top two networks).

Why is this the case?

I think it is because YouTube intentionally suppresses the ranking of anti-vaccine videos in its search results, so you are unlikely to find anti-vaccine videos even if you search with anti-vaccine keywords. However, when you start from an anti-vaccine seed video that you encountered on another platform, such as Facebook, Reddit, or your crazy uncle’s email, this protection mechanism no longer works. Once you click one anti-vaccine video, YouTube’s recommendation algorithm shows you more and more anti-vaccine videos. This is often called the “filter bubble.”

Tang, L., Fujimoto, K., Amith, M., Cunningham, R., Costantini, R. A., York, F., Xiang, G., Boom, J., & Tao, C. (2020). Going down the rabbit hole? An exploration of network exposure to vaccine misinformation on YouTube. *Journal of Medical Internet Research*. doi:10.2196/23262